CoinMarketCap LangGraph JS

Video Link: https://youtu.be/faosBOaERnU

GitHub Repository: https://github.com/Ashot72/CoinMarketCap-LangGraph-JS

LangGraph.js is a library for building stateful, multi-actor applications with LLMs, used to create agent and multi-agent workflows. Compared to other LLM frameworks, it offers three core benefits: cycles, controllability, and persistence.

I have created an application where you can ask an LLM questions about cryptocurrencies and receive replies. You can even purchase them (discussed below).

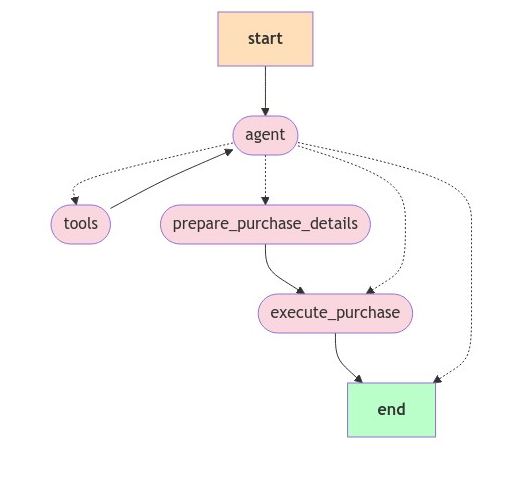

Figure 1

This is the LangGraph workflow we created.

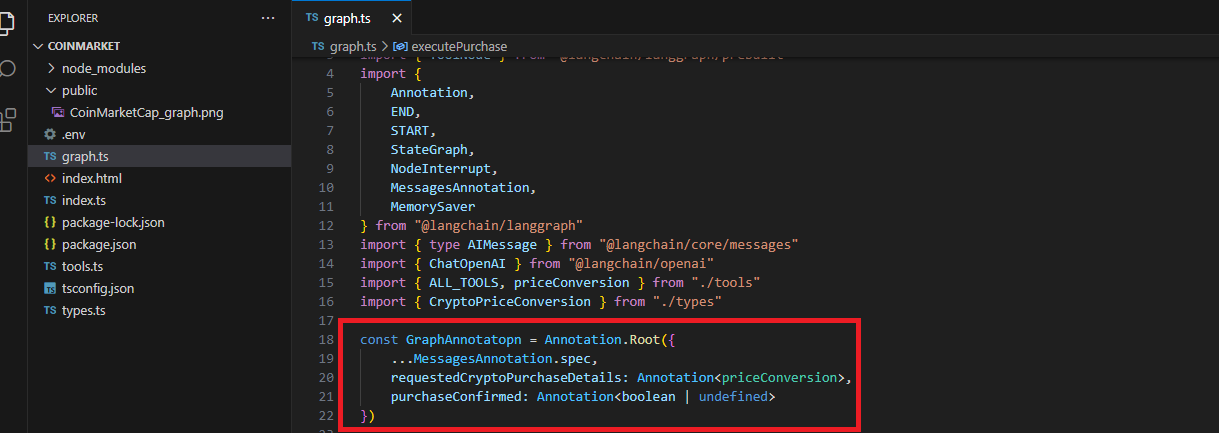

Figure 2

We define the state of our application using annotations, the recommended way to define the graph state for StateGraph graphs. We also specify two properties of our own: requestedCryptoPurchaseDetails and purchaseConfirmed.

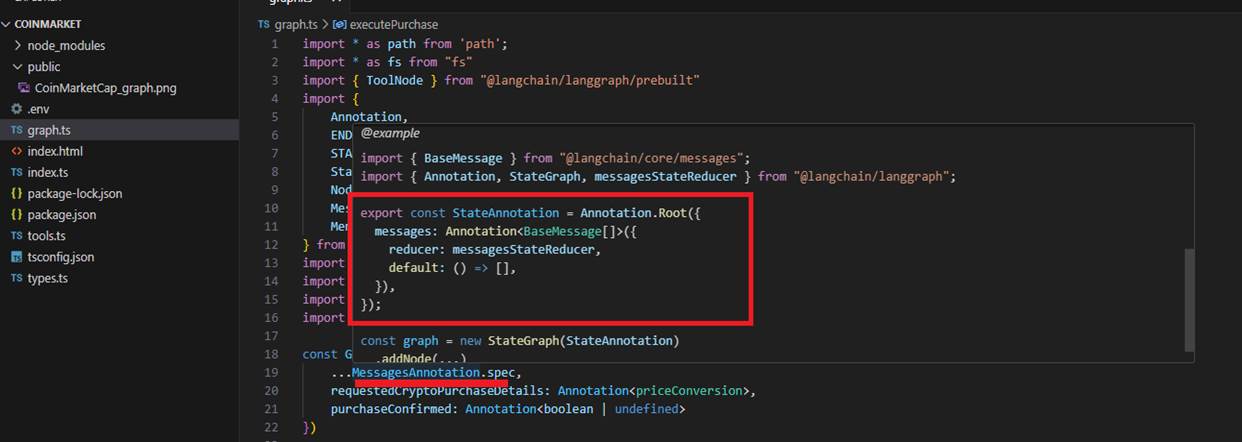

Figure 3

If we hover over MessagesAnnotation, we can see that the reducer function defines how new values are combined with the existing state, and the default function provides an initial value for the channel.
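
The reducer/default pairing can be illustrated without the library. The following is a minimal sketch in plain TypeScript; `StateChannel`, `messagesChannel`, and `purchaseConfirmedChannel` are illustrative names and not LangGraph exports, and messages are simplified to strings.

```typescript
// Library-free sketch: a "channel" pairs a reducer with a default value.
// StateChannel and the constants below are illustrative, not LangGraph's API.
interface StateChannel<T> {
  reducer: (current: T, update: T) => T; // how an update is merged into the current value
  defaultValue: () => T;                 // initial value before any update arrives
}

// Messages accumulate: each update is appended to what is already there.
const messagesChannel: StateChannel<string[]> = {
  reducer: (current, update) => current.concat(update),
  defaultValue: () => [],
};

// A flag such as purchaseConfirmed is simply overwritten by the newest update.
const purchaseConfirmedChannel: StateChannel<boolean | undefined> = {
  reducer: (_current, update) => update,
  defaultValue: () => undefined,
};

let messages = messagesChannel.defaultValue();
messages = messagesChannel.reducer(messages, ["What is BTC?"]);
messages = messagesChannel.reducer(messages, ["BTC is the ticker symbol for Bitcoin."]);

let purchaseConfirmed = purchaseConfirmedChannel.defaultValue();
purchaseConfirmed = purchaseConfirmedChannel.reducer(purchaseConfirmed, true);
```

After these calls, `messages` holds both entries in order, while `purchaseConfirmed` holds only the latest value.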

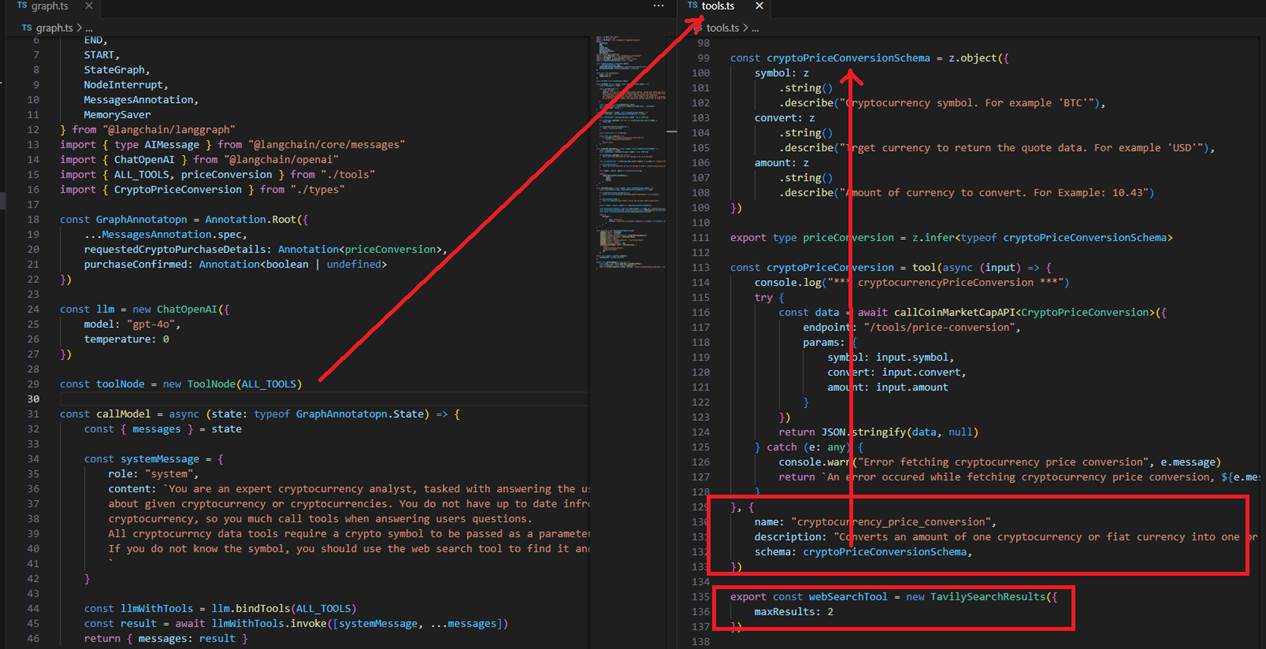

Figure 4

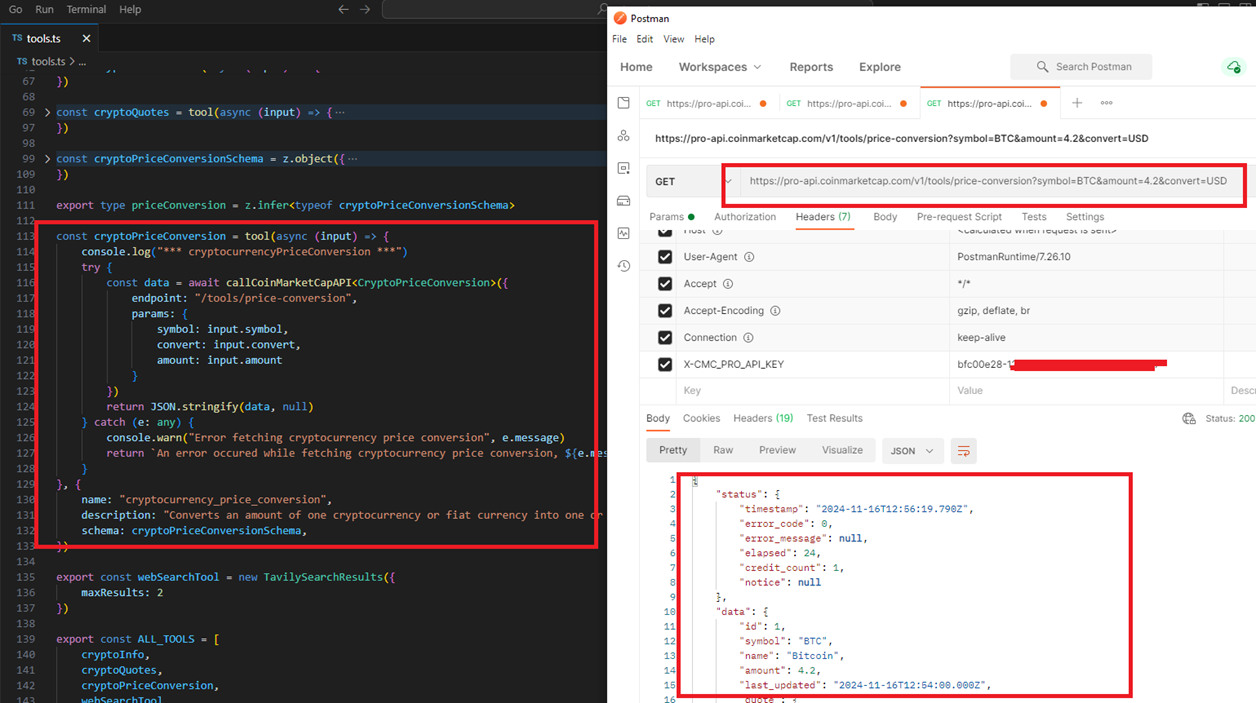

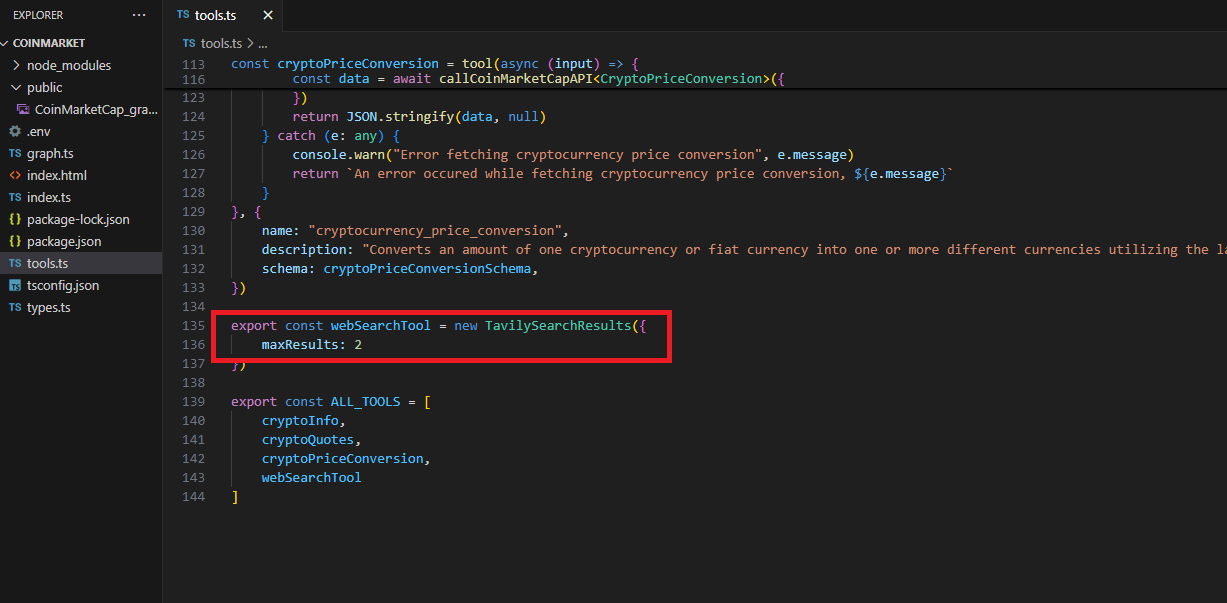

When constructing an agent, we need to provide a list of tools that it can use. While LangChain includes some prebuilt tools, it is often more useful to use tools that implement custom logic. In our app, we use the built-in Tavily Search tool to search the web, along with our custom tools, which we created based on a Zod schema.
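
To show what "a tool based on a schema" amounts to, here is a library-free sketch: a tool is a name, a description, an input validator, and a function to run. The hand-written `parseCryptoInfoInput` stands in for a Zod schema, and the names `ToolSketch`, `crypto_info`, and `callTool` are hypothetical, not taken from the app.

```typescript
// Library-free sketch of a schema-backed tool. A hand-written validator
// stands in for the Zod schema; all names here are hypothetical.
interface CryptoInfoInput {
  symbol: string;
}

interface ToolSketch<I, O> {
  name: string;
  description: string;          // what the LLM reads to decide when to call the tool
  parse: (raw: unknown) => I;   // throws on invalid input
  run: (input: I) => O;
}

function parseCryptoInfoInput(raw: unknown): CryptoInfoInput {
  if (typeof raw !== "object" || raw === null) {
    throw new Error("input must be an object");
  }
  const symbol = (raw as { symbol?: unknown }).symbol;
  if (typeof symbol !== "string" || symbol.length === 0) {
    throw new Error("symbol must be a non-empty string");
  }
  // Normalize to upper case so "btc" and "BTC" are treated alike.
  return { symbol: symbol.toUpperCase() };
}

const cryptoInfoTool: ToolSketch<CryptoInfoInput, string> = {
  name: "crypto_info",
  description: "Returns information about a cryptocurrency given its symbol.",
  parse: parseCryptoInfoInput,
  // The real tool would call an API here; this placeholder only echoes the symbol.
  run: (input) => `Would look up metadata for ${input.symbol}`,
};

// Validate first, then run: invalid arguments never reach the tool body.
function callTool<I, O>(tool: ToolSketch<I, O>, raw: unknown): O {
  return tool.run(tool.parse(raw));
}

const toolResult = callTool(cryptoInfoTool, { symbol: "btc" });
```

Validating before running means malformed arguments produced by the model are rejected with a clear error instead of reaching the API call.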

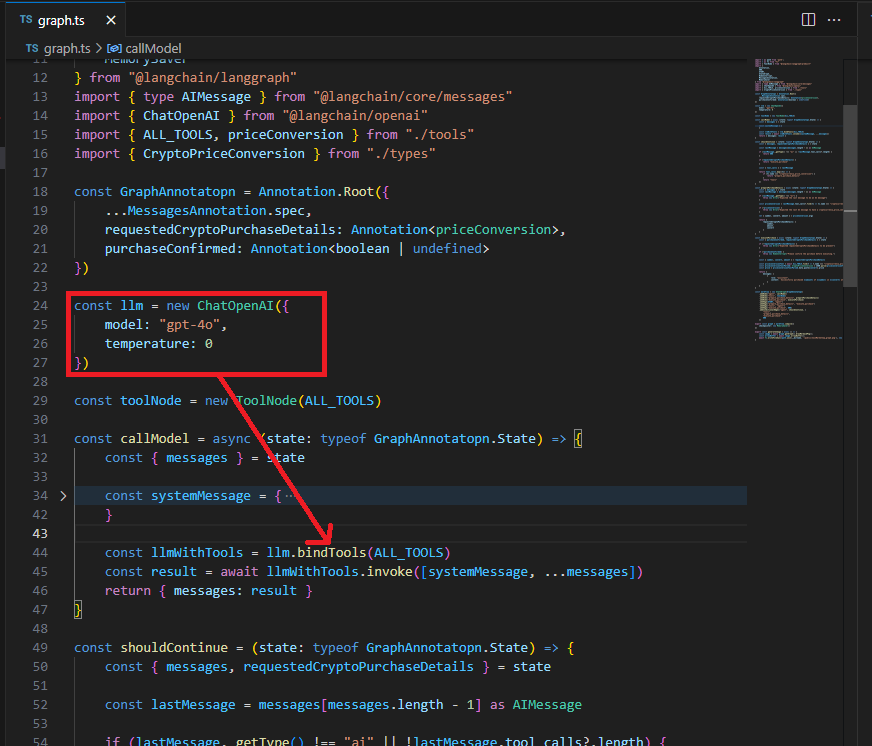

Figure 5

Most importantly, we bind the tools to our LLM.

Figure 6

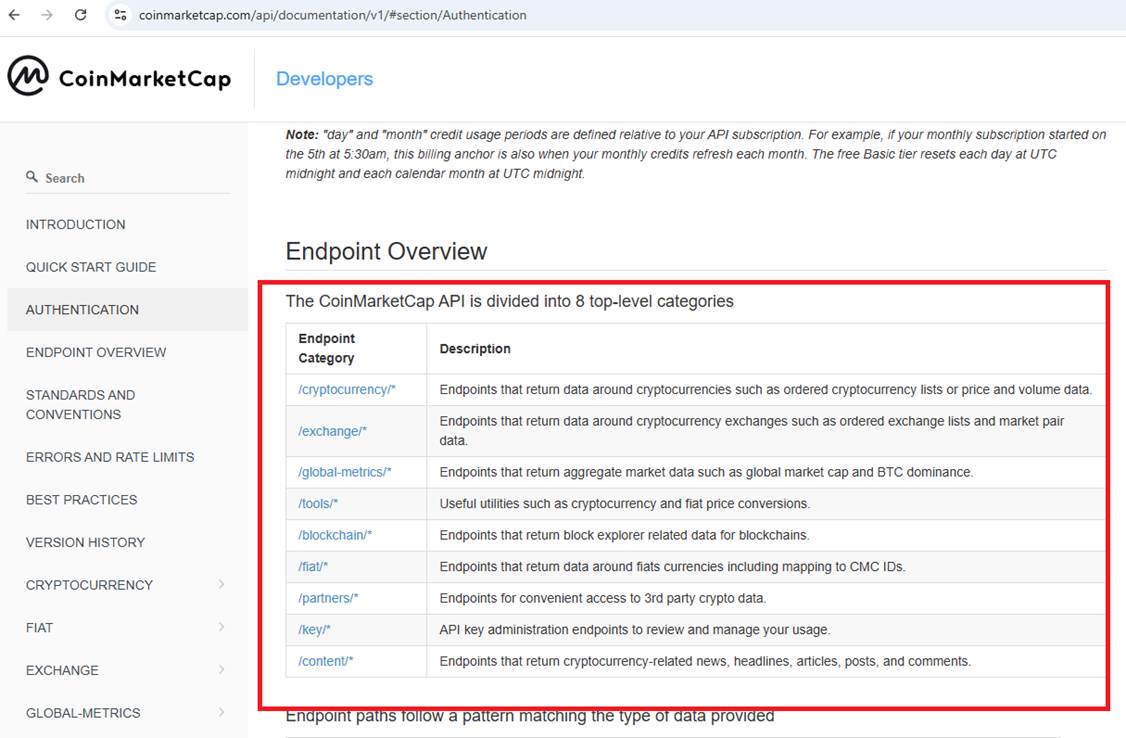

We built our custom tools based on the CoinMarketCap API.

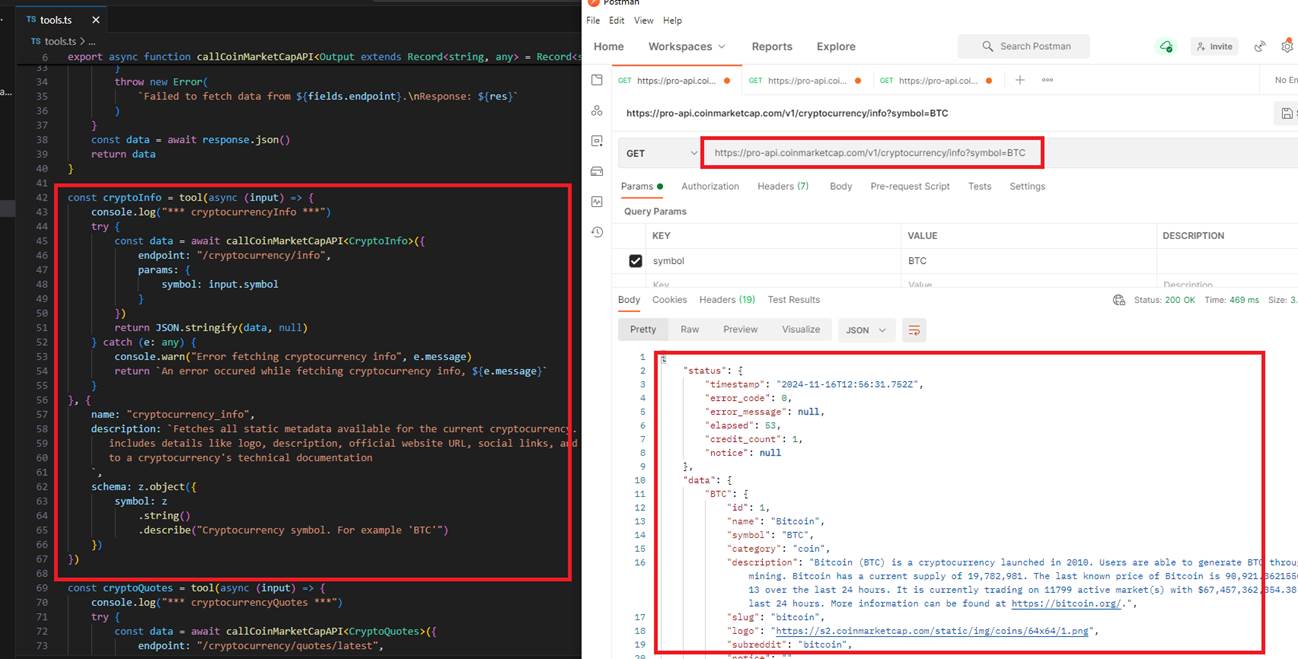

Figure 7

The first tool is the cryptocurrency info tool, where we pass the symbol, such as BTC.

Figure 8

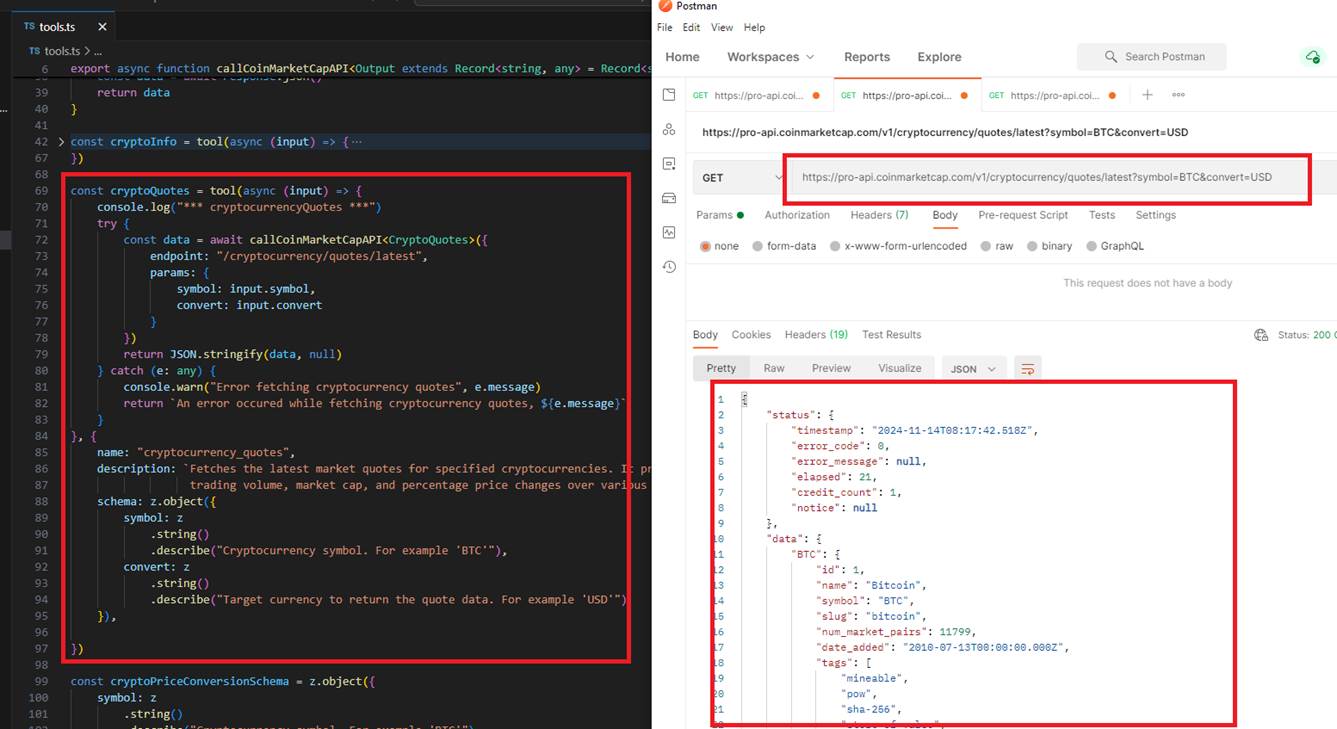

The second tool is the cryptoQuotes tool.

Figure 9

The third tool is the price conversion tool, where we convert, for example, BTC to USD by specifying an amount.
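
As a rough illustration of what such a conversion request could look like, the sketch below only builds the URL and headers and makes no network call. The base URL, the `/v2/tools/price-conversion` path, and the `X-CMC_PRO_API_KEY` header are assumptions to check against the CoinMarketCap API documentation; the function names are hypothetical and not taken from the app.

```typescript
// Sketch only: builds the request pieces, makes no network call.
// The base URL, path, and header name are assumptions; verify them
// against the CoinMarketCap API documentation before use.
interface PriceConversionArgs {
  amount: number;  // how many units of `symbol` to convert
  symbol: string;  // source currency, e.g. "BTC"
  convert: string; // target currency, e.g. "USD"
}

const CMC_BASE_URL = "https://pro-api.coinmarketcap.com";

function buildPriceConversionUrl(args: PriceConversionArgs): string {
  const url = new URL("/v2/tools/price-conversion", CMC_BASE_URL);
  url.searchParams.set("amount", String(args.amount));
  url.searchParams.set("symbol", args.symbol);
  url.searchParams.set("convert", args.convert);
  return url.toString();
}

function buildHeaders(apiKey: string): Record<string, string> {
  return { "X-CMC_PRO_API_KEY": apiKey, Accept: "application/json" };
}

const conversionUrl = buildPriceConversionUrl({ amount: 2, symbol: "BTC", convert: "USD" });
const conversionHeaders = buildHeaders("YOUR_API_KEY"); // placeholder key
```

Keeping URL construction in a pure function makes the tool easy to test without an API key.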

Figure 10

The Tavily Search tool is used to search the web. When you ask a question with only an approximate cryptocurrency name, such as "What is ethery?", the model uses this tool to search the web.

Figure 11

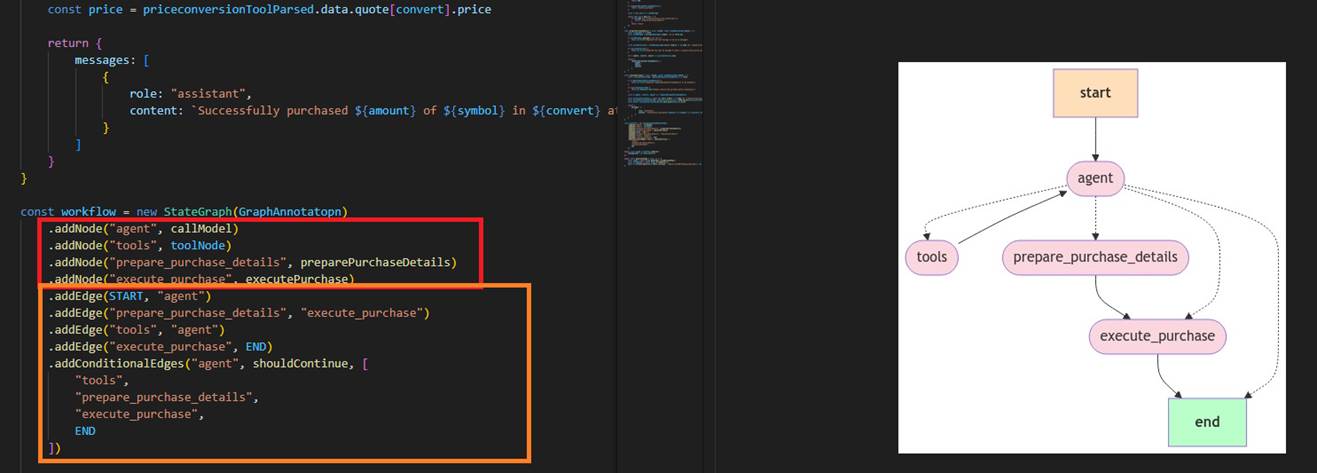

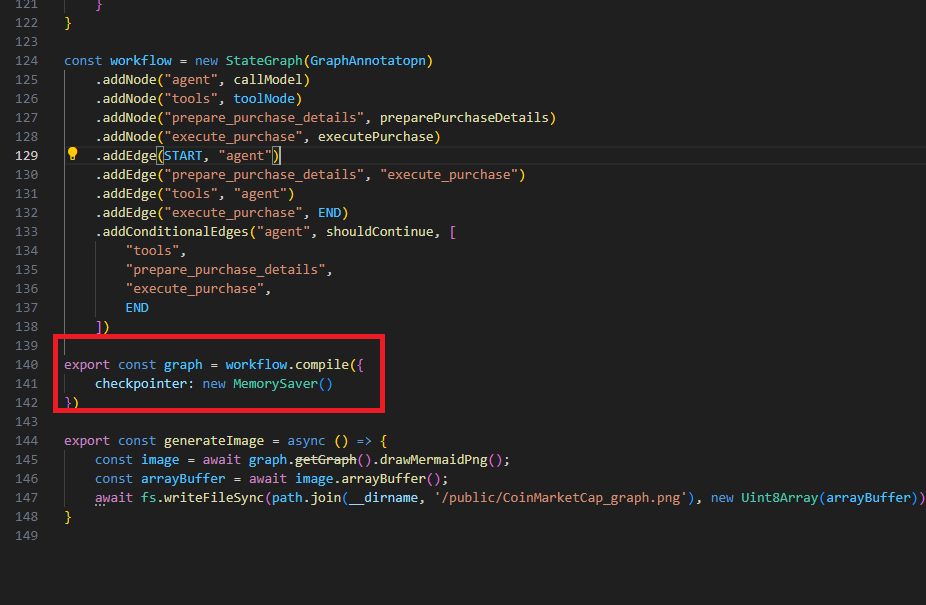

In LangGraph, nodes and edges are key components of the framework used to design workflows, typically for managing tasks and interactions with large language models (LLMs) and other agents. A node in LangGraph represents a specific computational task or operation, serving as the fundamental unit of work in a workflow. An edge in LangGraph represents the connection or flow between two nodes, determining the order of execution and how data is passed between them.
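
The node/edge idea can be reduced to a few lines of plain TypeScript. This is a conceptual sketch, not LangGraph's StateGraph API: nodes are functions over a state, edges name the node that runs next, and `runGraph` is a hypothetical helper.

```typescript
// Conceptual sketch, not LangGraph's StateGraph API.
// Nodes transform the state; edges decide which node runs next.
interface GraphState {
  log: string[];
}
type NodeFn = (state: GraphState) => GraphState;

const END_MARKER = "__end__";

const graphNodes: Record<string, NodeFn> = {
  agent: (state) => ({ log: [...state.log, "agent"] }),
  tools: (state) => ({ log: [...state.log, "tools"] }),
};

// Fixed edges: after "agent" run "tools", after "tools" stop.
const graphEdges: Record<string, string> = {
  agent: "tools",
  tools: END_MARKER,
};

function runGraph(start: string, initial: GraphState): GraphState {
  let current = start;
  let state = initial;
  while (current !== END_MARKER) {
    const node = graphNodes[current];
    if (node === undefined) throw new Error(`Unknown node: ${current}`);
    state = node(state); // the node's output becomes the next node's input
    const next = graphEdges[current];
    if (next === undefined) throw new Error(`No edge from: ${current}`);
    current = next;
  }
  return state;
}

const finalState = runGraph("agent", { log: [] });
```

Here `finalState.log` records the order in which the nodes ran, which is exactly what the edges dictate.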

Figure 12

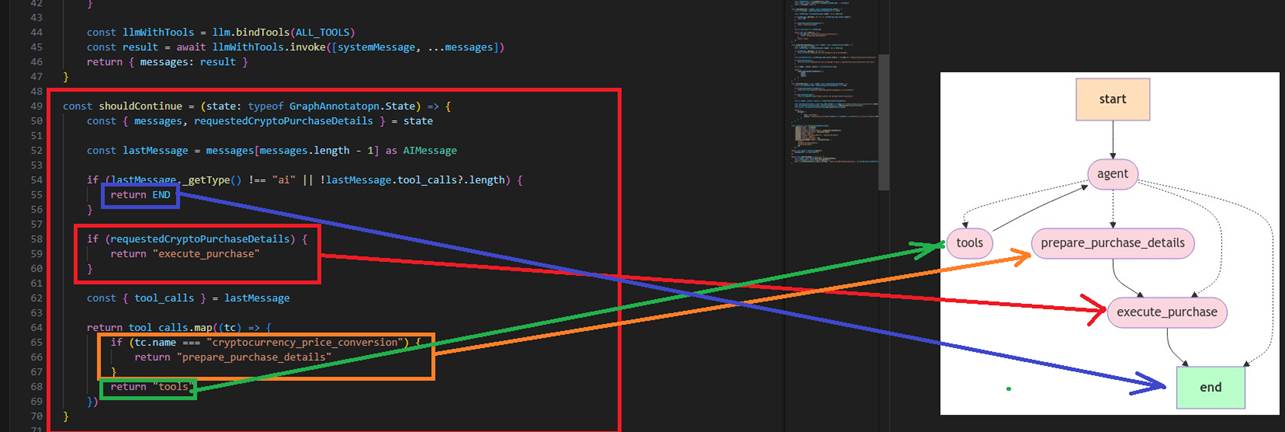

In LangGraph, conditional edges allow for dynamic routing within a graph based on the outcome of the preceding node's operation. This enables decision-making within workflows, where the flow of execution can change depending on certain conditions.

In our app, if the model's reply contains no tool calls, we END the flow. If we already have the requestedCryptoPurchaseDetails info, we call the execute_purchase node. If the cryptocurrency price conversion tool is used, we call the prepare_purchase_details node; otherwise, we call the tools node.
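
The routing rules above can be written as a single function that returns the name of the next node. This is a sketch under assumptions: the state shape, the tool name `price_conversion`, and the order in which the checks run are guesses for illustration, not taken from the app's source.

```typescript
// Sketch of a routing function for a conditional edge.
// State shape, tool name, and check order are assumptions for illustration.
interface ToolCallSketch {
  name: string;
}

interface RouterState {
  toolCalls: ToolCallSketch[]; // tool calls requested in the model's last reply
  requestedCryptoPurchaseDetails?: { symbol: string; amount: number };
}

type Route = "__end__" | "execute_purchase" | "prepare_purchase_details" | "tools";

const PRICE_CONVERSION_TOOL = "price_conversion"; // hypothetical tool name

function routeAfterAgent(state: RouterState): Route {
  // No tool calls: the model has answered, so the flow ends.
  if (state.toolCalls.length === 0) return "__end__";
  // Purchase details already collected: go execute the purchase.
  if (state.requestedCryptoPurchaseDetails !== undefined) return "execute_purchase";
  // A price conversion request starts the purchase preparation.
  const usesConversion = state.toolCalls.some((call) => call.name === PRICE_CONVERSION_TOOL);
  return usesConversion ? "prepare_purchase_details" : "tools";
}

const nextNode = routeAfterAgent({ toolCalls: [{ name: "price_conversion" }] });
```

Because the function is pure, each branch can be checked in isolation before it is wired into a graph.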

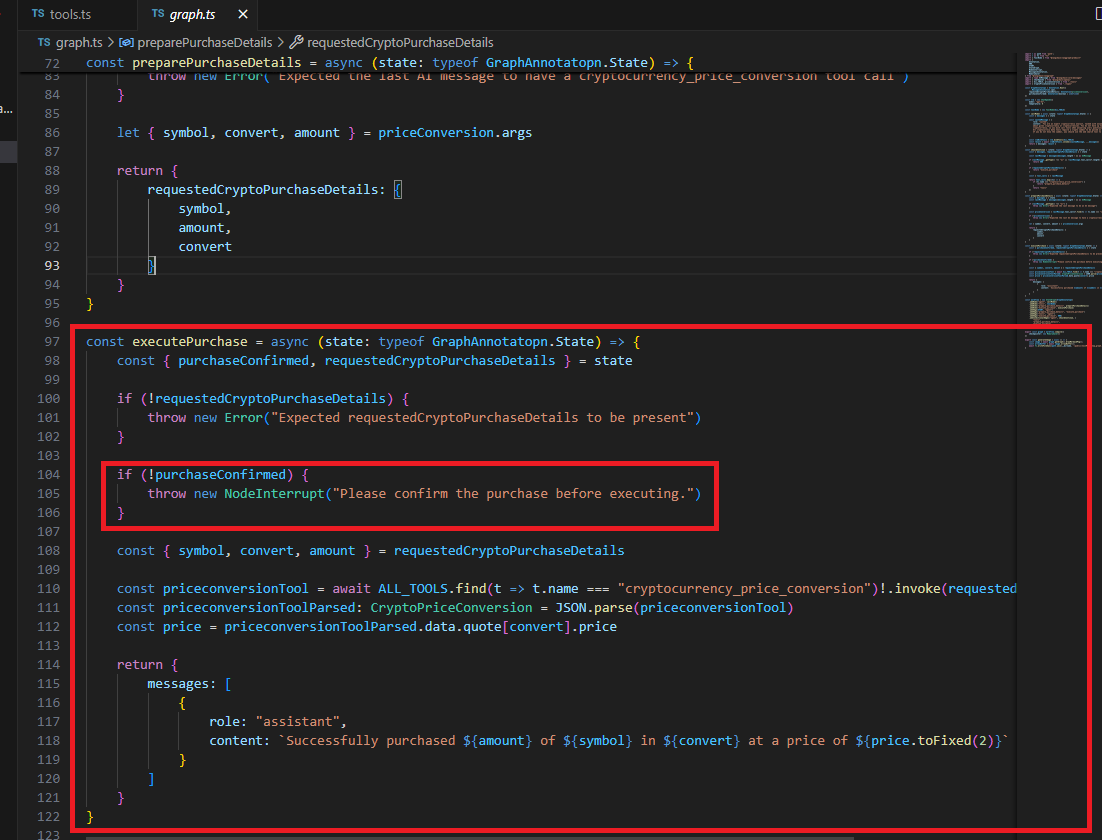

Figure 13

In the execute_purchase node, if the purchaseConfirmed state is not defined, we throw a NodeInterrupt.

In LangGraph, a dynamic interrupt is a mechanism that allows the graph workflow to be interrupted at runtime, during a node's execution, when specific conditions are met. This is typically implemented by raising a special exception, such as NodeInterrupt, inside a node. This feature can be especially useful in human-in-the-loop (HITL) applications, where human intervention is required before proceeding with certain tasks. By dynamically interrupting the workflow, we can pause execution, review the current state, and decide whether to resume, adjust the process, or terminate it.
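
The pause-on-exception pattern can be sketched without the library. Below, `NodeInterruptSketch` is a local stand-in for LangGraph's NodeInterrupt, and `runNode` is a hypothetical runner that turns the exception into a "paused" outcome; the message text is invented for the example.

```typescript
// Local stand-in for LangGraph's NodeInterrupt so the sketch runs on its own.
class NodeInterruptSketch extends Error {
  constructor(message: string) {
    super(message);
    this.name = "NodeInterruptSketch";
  }
}

interface PurchaseState {
  purchaseConfirmed?: boolean;
  result?: string;
}

// The node refuses to continue until the user has confirmed the purchase.
function executePurchase(state: PurchaseState): PurchaseState {
  if (state.purchaseConfirmed !== true) {
    throw new NodeInterruptSketch("Please accept the Terms & Conditions to continue.");
  }
  return { ...state, result: "purchase executed (demo)" };
}

type RunOutcome =
  | { status: "done"; state: PurchaseState }
  | { status: "interrupted"; reason: string };

// Hypothetical runner: an interrupt pauses the run, any other error propagates.
function runNode(state: PurchaseState): RunOutcome {
  try {
    return { status: "done", state: executePurchase(state) };
  } catch (error) {
    if (error instanceof NodeInterruptSketch) {
      return { status: "interrupted", reason: error.message };
    }
    throw error;
  }
}

const paused = runNode({});
const resumed = runNode({ purchaseConfirmed: true });
```

The first call pauses with the interrupt message; the second, with the flag set, runs the node to completion.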

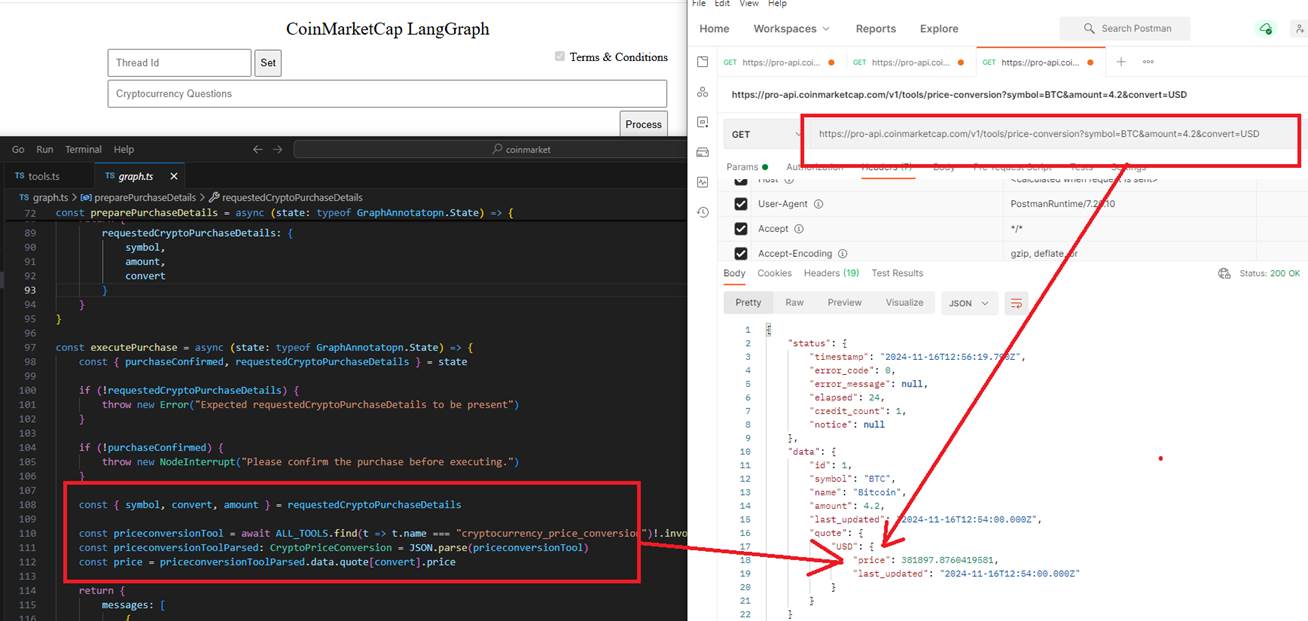

Figure 14

When the Terms & Conditions checkbox is checked, the execute_purchase node is called, where we directly call the price conversion tool to get the price by passing the necessary information that we have already received.

Figure 15

In LangGraph, checkpointers and memory savers are used to manage the persistence of workflows and handle the recovery of graph states across different stages of execution.
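
Conceptually, an in-memory checkpointer is a store of state snapshots keyed by conversation. The sketch below is a simplified illustration, not LangGraph's MemorySaver; the class name, the `Checkpoint` shape, and the thread identifiers are hypothetical.

```typescript
// Simplified illustration of checkpointing, not LangGraph's MemorySaver.
interface Checkpoint {
  step: number;
  messages: string[];
}

class InMemoryCheckpointer {
  // One history of snapshots per conversation thread.
  private readonly store = new Map<string, Checkpoint[]>();

  save(threadId: string, checkpoint: Checkpoint): void {
    const history = this.store.get(threadId) ?? [];
    // Store a copy so later mutations do not alter saved history.
    history.push({ step: checkpoint.step, messages: [...checkpoint.messages] });
    this.store.set(threadId, history);
  }

  // The most recent snapshot is what a resumed run would start from.
  latest(threadId: string): Checkpoint | undefined {
    const history = this.store.get(threadId);
    return history === undefined ? undefined : history[history.length - 1];
  }
}

const saver = new InMemoryCheckpointer();
saver.save("thread-1", { step: 1, messages: ["What is bitcoin?"] });
saver.save("thread-1", { step: 2, messages: ["What is bitcoin?", "Bitcoin is a cryptocurrency."] });
saver.save("thread-2", { step: 1, messages: ["What is ETH?"] });

const latestForThread1 = saver.latest("thread-1");
```

Because snapshots live only in process memory, they are lost on restart; a persistent store would be needed to survive that.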

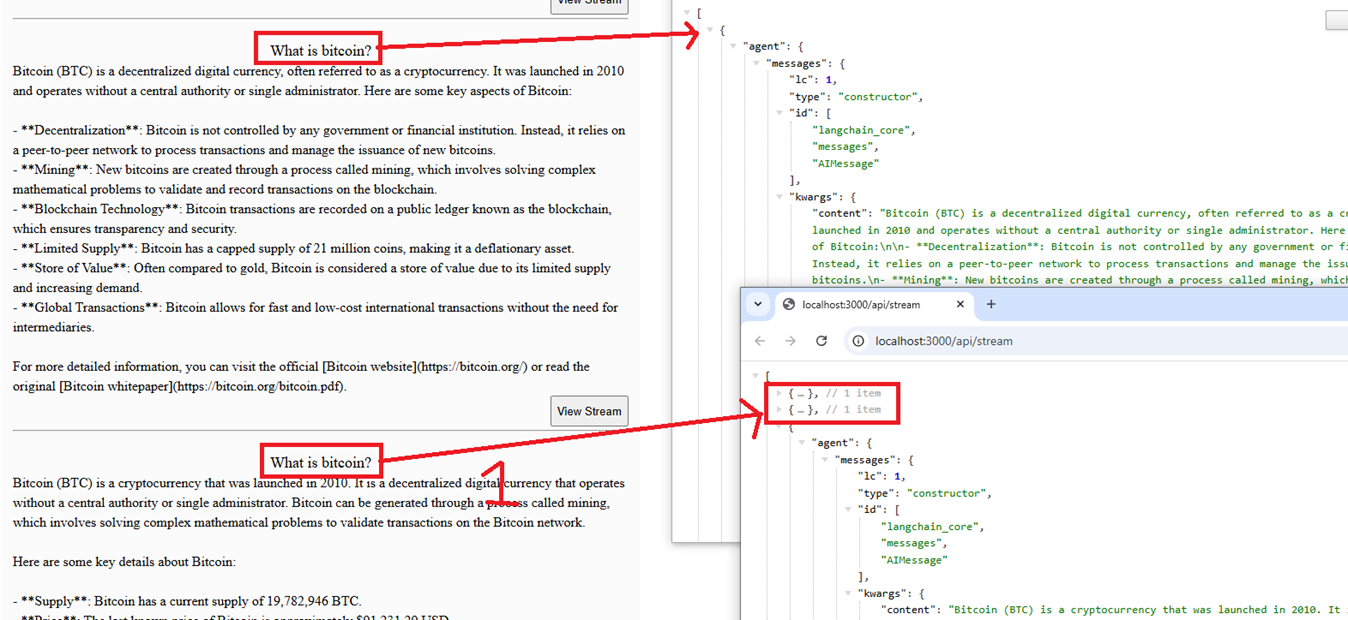

Figure 16

In the video, you can see that when I asked the same question, "What is bitcoin?", a second time, the answer was served directly from memory without calling the model or the tools.

Figure 17

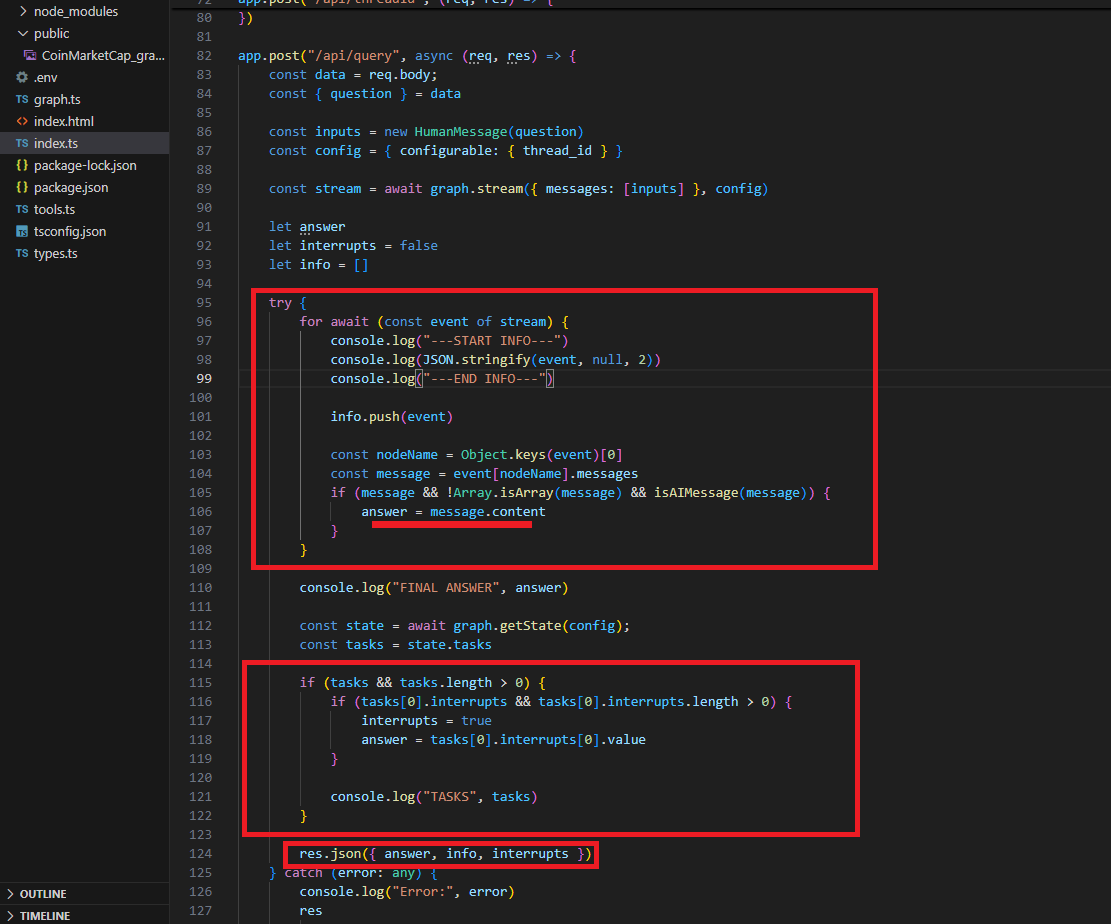

When we ask a question, we call the /api/query endpoint. We pass the question as a human message and stream the detailed information back so that it can be displayed on the front end when the View Stream button is clicked. If there is a node interruption, such as when the Terms & Conditions checkbox is not checked, we pass along the interrupt message, which can be extracted from the task.
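
Extracting the interrupt message from a task might look like the sketch below. The snapshot shape used here (`tasks`, each with an `interrupts` array whose entries carry a `value`) is an assumption modeled on what a state snapshot exposes, and the type and function names are hypothetical.

```typescript
// Assumed snapshot shape: tasks[].interrupts[].value.
// The types and function name are hypothetical, for illustration only.
interface InterruptSketch {
  value: unknown;
}

interface TaskSketch {
  name: string;
  interrupts: InterruptSketch[];
}

interface SnapshotSketch {
  tasks: TaskSketch[];
}

// Returns the first string-valued interrupt message, if any task carries one.
function firstInterruptMessage(snapshot: SnapshotSketch): string | undefined {
  for (const task of snapshot.tasks) {
    for (const interrupt of task.interrupts) {
      if (typeof interrupt.value === "string") return interrupt.value;
    }
  }
  return undefined;
}

const pausedSnapshot: SnapshotSketch = {
  tasks: [
    {
      name: "execute_purchase",
      interrupts: [{ value: "Please accept the Terms & Conditions." }],
    },
  ],
};

const interruptMessage = firstInterruptMessage(pausedSnapshot);
```

When no task carries an interrupt, the function returns `undefined`, which the endpoint can treat as a normal, uninterrupted run.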

Figure 18

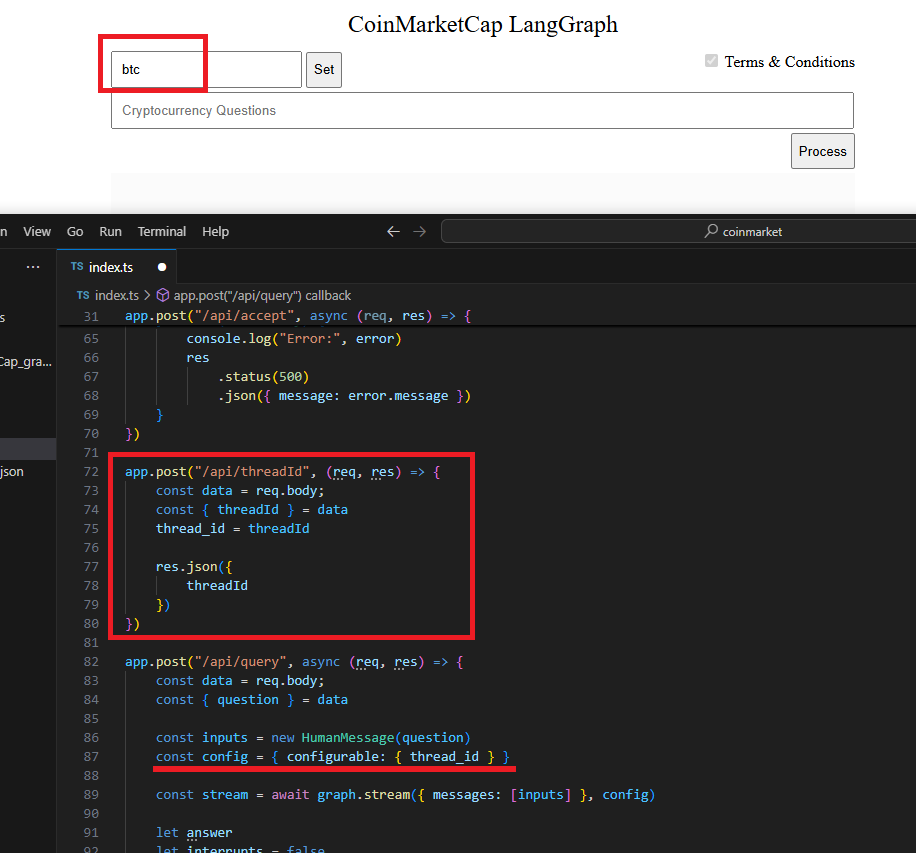

We set the thread ID before asking a question.

In LangGraph, the thread ID is a unique identifier used to track a specific interaction or conversation flow within the graph. This ID is crucial for maintaining the context of interactions, especially in scenarios involving multiple user sessions or conversations. The thread ID helps to manage and persist the state of the conversation, enabling features like memory retention, message tracking, and checkpoints.

Figure 19

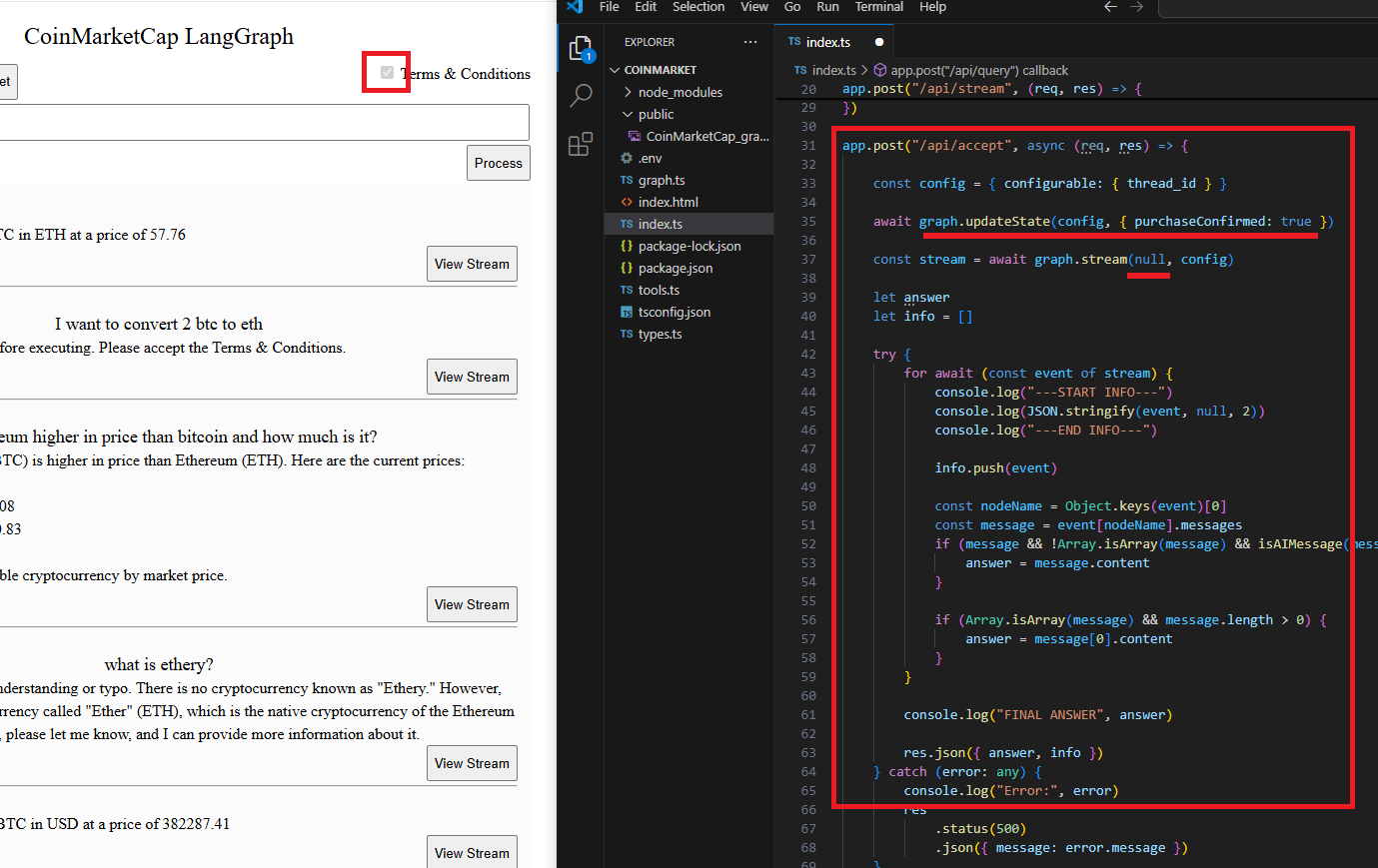

When the Terms & Conditions checkbox is checked, the /api/accept endpoint is called, where we update the graph state by setting purchaseConfirmed to true.
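
The update-then-resume step can be sketched as a patch applied to the stored state of one thread. This is a library-free illustration: `threadStates`, `updateThreadState`, `handleAccept`, and the `pendingNode` field are hypothetical names, not the app's code or LangGraph's API.

```typescript
// Library-free illustration of "update the state, then resume".
// All names here are hypothetical.
interface ThreadState {
  purchaseConfirmed?: boolean;
  pendingNode?: string; // node that was interrupted and should run next
}

const threadStates = new Map<string, ThreadState>();

// Merge a partial update into the stored state of one thread.
function updateThreadState(threadId: string, patch: Partial<ThreadState>): ThreadState {
  const current = threadStates.get(threadId);
  if (current === undefined) throw new Error(`Unknown thread: ${threadId}`);
  const next: ThreadState = { ...current, ...patch };
  threadStates.set(threadId, next);
  return next;
}

// What an accept-style handler would do: confirm, then report where to resume.
function handleAccept(threadId: string): { resumeFrom: string | undefined } {
  const next = updateThreadState(threadId, { purchaseConfirmed: true });
  return { resumeFrom: next.pendingNode };
}

// A thread that was paused inside execute_purchase.
threadStates.set("thread-1", { pendingNode: "execute_purchase" });
const acceptResult = handleAccept("thread-1");
```

Only the confirmed flag changes; the rest of the thread's state is carried over, so the interrupted node can pick up where it stopped.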

The purchase is for demo purposes only, as you cannot actually buy a crypto coin from CoinMarketCap. Applications usually allow users to perform such actions only once they are authenticated (logged in). The Terms & Conditions checkbox serves as a stand-in for that in this application.

Figure 20

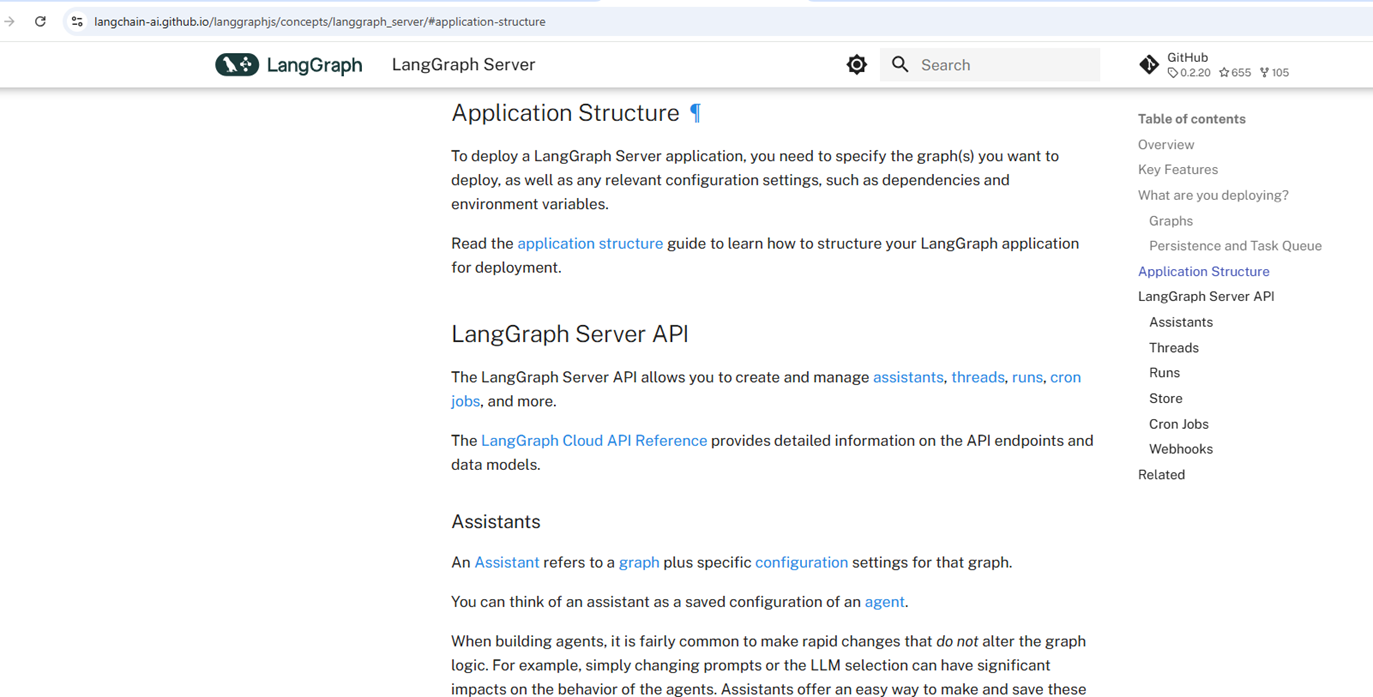

If you want to deploy your app to LangGraph Server, you can use the LangGraph Server SDK instead. We do not intend to deploy this app to the server.

You can read more about it.

Figure 21

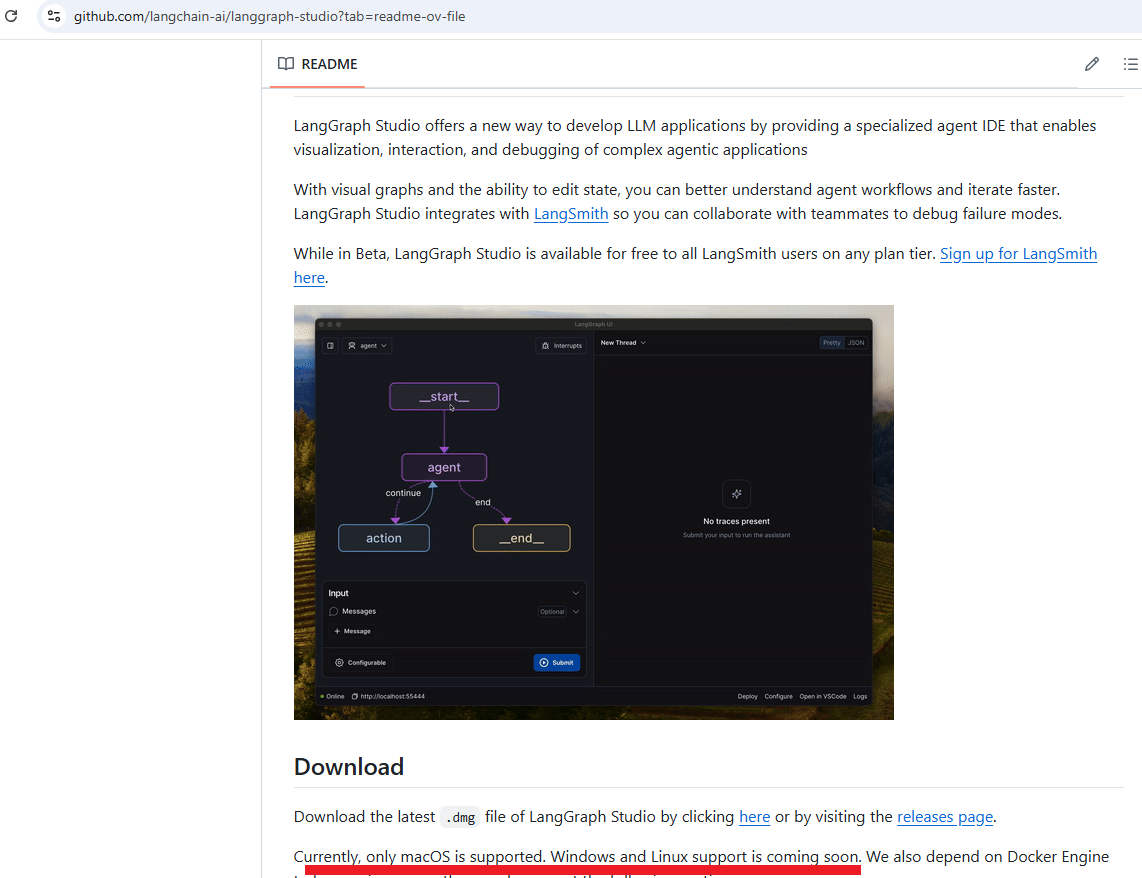

LangGraph Studio offers a new way to develop LLM applications by providing a specialized agent IDE that enables visualization, interaction, and debugging of complex agentic applications.

Currently, only macOS is supported. Windows and Linux support is coming soon.

Figure 22

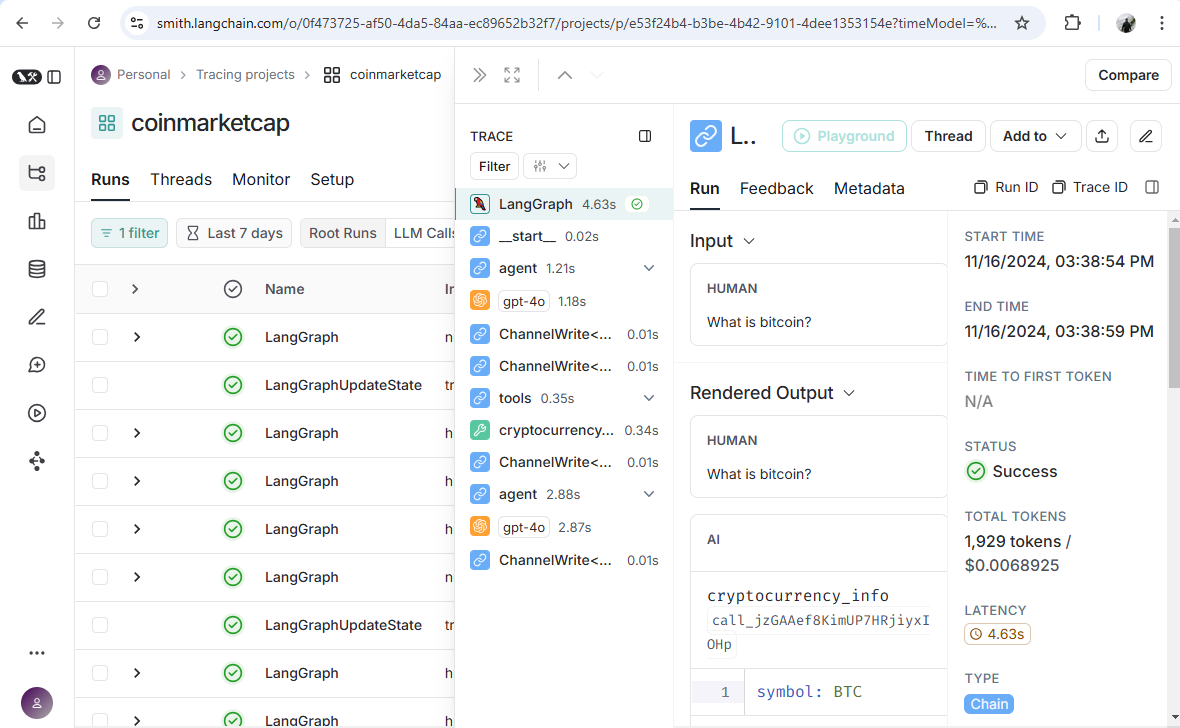

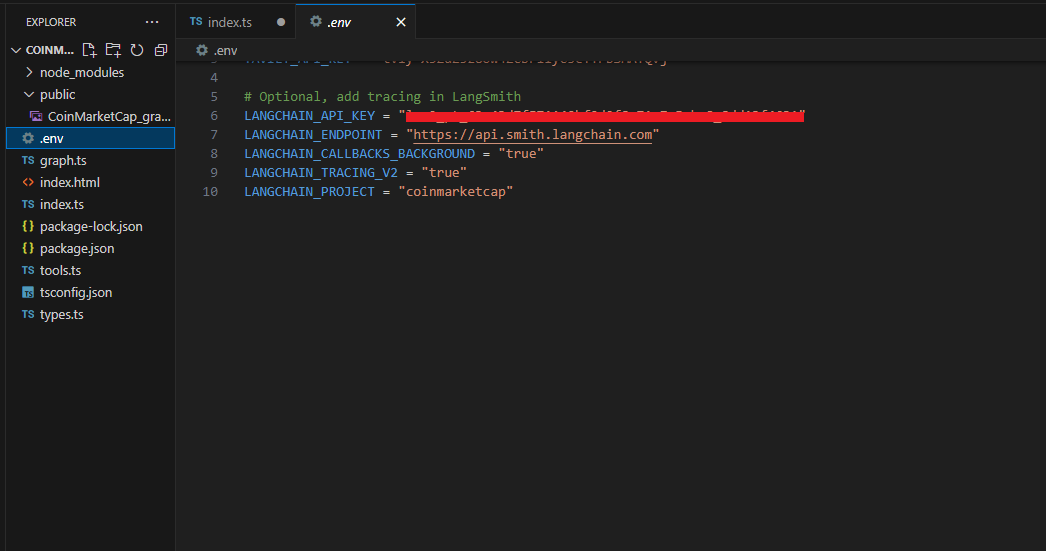

LangSmith is a platform designed to assist with the debugging, testing, and optimization of large language model (LLM) applications, particularly in production environments.

It provides tools to monitor model performance, trace execution flows, and evaluate LLM interactions, ensuring they operate efficiently and as expected.

Figure 23

LangSmith tracing is optional.